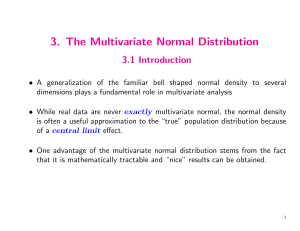

PCA

Philosophy of PCA

• Introduced by Pearson (1901) and Hotelling (1933) to describe the variation in a set of multivariate data in terms of a set of uncorrelated variables
• We typically have a data matrix of n observations on p correlated variables x1, x2, …, xp
• PCA looks for a transformation of the xi into p new variables yi that are uncorrelated
• An orthogonal linear transformation transforms the data to a new coordinate system such that the greatest variance comes to lie on the first coordinate, the second greatest variance on the second coordinate, and so on.
• The objectives of principal components analysis are
  - data reduction
  - interpretation
• The results of principal components analysis are often used as inputs to
  - regression analysis
  - cluster analysis
• Suppose we have a population measured on p random variables X1, …, Xp. Note that these random variables represent the p axes of the Cartesian coordinate system in which the population resides.
• Our goal is to develop a new set of p axes (linear combinations of the original p axes) in the directions of greatest variability. This is accomplished by rotating the axes.

[Figure: the population in the (X1, X2) plane, with new axes pointing in the directions of greatest variability]

Consider our random vector

$$X = \begin{pmatrix} X_1 \\ X_2 \\ \vdots \\ X_p \end{pmatrix}$$

with covariance matrix $\Sigma$ and eigenvalues $\lambda_1 \ge \lambda_2 \ge \cdots \ge \lambda_p$. We can construct p linear combinations

$$\begin{aligned}
Y_1 &= a_1'X = a_{11}X_1 + a_{12}X_2 + \cdots + a_{1p}X_p \\
Y_2 &= a_2'X = a_{21}X_1 + a_{22}X_2 + \cdots + a_{2p}X_p \\
&\;\;\vdots \\
Y_p &= a_p'X = a_{p1}X_1 + a_{p2}X_2 + \cdots + a_{pp}X_p
\end{aligned}$$

It is easy to show that

$$\operatorname{Var}(Y_i) = a_i'\Sigma a_i, \quad i = 1, \ldots, p$$

$$\operatorname{Cov}(Y_i, Y_k) = a_i'\Sigma a_k, \quad i, k = 1, \ldots, p$$

The principal components are those uncorrelated linear combinations $Y_1, \ldots, Y_p$ whose variances are as large as possible.
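The identities $\operatorname{Var}(Y_i) = a_i'\Sigma a_i$ and $\operatorname{Cov}(Y_i, Y_k) = a_i'\Sigma a_k$ can be checked numerically. The sketch below is a minimal pure-Python illustration, assuming the covariance is computed with divisor n (as in the four-observation worked example later in this section, whose data it reuses). The coefficient vectors `a` and `b` are arbitrary illustrative choices, and the helper names are hypothetical.

```python
def population_cov_matrix(rows):
    """Covariance matrix of observations (one list of p values per row), divisor n."""
    n, p = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(p)]
    return [[sum((r[j] - means[j]) * (r[k] - means[k]) for r in rows) / n
             for k in range(p)]
            for j in range(p)]

def bilinear(a, S, b):
    """Compute a' S b for vectors a, b and a square matrix S (lists of rows)."""
    return sum(a[i] * S[i][j] * b[j] for i in range(len(a)) for j in range(len(b)))

def combine(rows, a):
    """Values of the linear combination Y = a'X for every observation."""
    return [sum(ai * xi for ai, xi in zip(a, r)) for r in rows]

def population_cov(u, v):
    """Covariance of two equal-length samples with divisor n (the variance when u is v)."""
    n = len(u)
    mu, mv = sum(u) / n, sum(v) / n
    return sum((x - mu) * (y - mv) for x, y in zip(u, v)) / n

# Four observations on X1, X2, X3 (same values as the worked example).
X = [[1.0, 6.0, 9.0], [4.0, 12.0, 10.0], [3.0, 12.0, 15.0], [4.0, 10.0, 12.0]]
S = population_cov_matrix(X)
a = [1.0, 1.0, 1.0]    # illustrative coefficient vectors, not from the slides
b = [1.0, -1.0, 0.5]
Ya, Yb = combine(X, a), combine(X, b)
print(population_cov(Ya, Ya), bilinear(a, S, a))  # Var(Ya) computed two ways
print(population_cov(Ya, Yb), bilinear(a, S, b))  # Cov(Ya, Yb) computed two ways
```

Both columns of each printed line agree, which is exactly the statement that the variance and covariance of linear combinations are quadratic and bilinear forms in $\Sigma$.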
Thus the first principal component is the linear combination of maximum variance, i.e., we wish to solve the nonlinear optimization problem

$$\max_{a_1} \; a_1'\Sigma a_1 \quad \text{subject to} \quad a_1'a_1 = 1$$

The quadratic objective is the source of the nonlinearity, and the constraint restricts attention to coefficient vectors of unit length (without it, the variance could be made arbitrarily large simply by scaling $a_1$).

The second principal component is the linear combination of maximum variance that is uncorrelated with the first principal component, i.e., we wish to solve the nonlinear optimization problem

$$\max_{a_2} \; a_2'\Sigma a_2 \quad \text{subject to} \quad a_2'a_2 = 1, \quad a_1'\Sigma a_2 = 0$$

where the last constraint restricts the covariance to zero.

The third principal component is the solution to the nonlinear optimization problem

$$\max_{a_3} \; a_3'\Sigma a_3 \quad \text{subject to} \quad a_3'a_3 = 1, \quad a_1'\Sigma a_3 = 0, \quad a_2'\Sigma a_3 = 0$$

where the last two constraints restrict the covariances to zero.

Generally, the ith principal component is the linear combination of maximum variance that is uncorrelated with all previous principal components, i.e., we wish to solve the nonlinear optimization problem

$$\max_{a_i} \; a_i'\Sigma a_i \quad \text{subject to} \quad a_i'a_i = 1, \quad a_k'\Sigma a_i = 0 \;\; \text{for } k < i$$

We can show that, for a random vector X with covariance matrix $\Sigma$ and eigenvalue-eigenvector pairs $(\lambda_1, e_1), \ldots, (\lambda_p, e_p)$, where $\lambda_1 \ge \lambda_2 \ge \cdots \ge \lambda_p \ge 0$ and each $e_i$ is normalized so that $e_i'e_i = 1$, the ith principal component is given by

$$Y_i = e_i'X = e_{i1}X_1 + e_{i2}X_2 + \cdots + e_{ip}X_p, \quad i = 1, \ldots, p$$

with $\operatorname{Var}(Y_i) = e_i'\Sigma e_i = \lambda_i$ and $\operatorname{Cov}(Y_i, Y_k) = e_i'\Sigma e_k = 0$ for $i \ne k$. Note that the principal components are not unique if some eigenvalues are equal.
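The result above says the optimization problems are solved by the eigenvectors of $\Sigma$. One way to obtain those eigenpairs without any dependencies is the cyclic Jacobi method for symmetric matrices, sketched below in pure Python. This is a swapped-in textbook algorithm chosen only to keep the example self-contained (in practice a library routine such as NumPy's `numpy.linalg.eigh` would normally be used); the helper names `jacobi_eigh`, `matmul`, `transpose` and `identity` are hypothetical. The matrix is the covariance matrix of the worked example, and eigenvector signs are fixed by making the first nonzero entry positive, since an eigenvector's sign is arbitrary.

```python
import math

def matmul(A, B):
    """Matrix product for matrices stored as lists of rows."""
    return [[sum(A[i][t] * B[t][j] for t in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def transpose(A):
    return [list(col) for col in zip(*A)]

def identity(p):
    return [[1.0 if i == j else 0.0 for j in range(p)] for i in range(p)]

def jacobi_eigh(S, tol=1e-20, max_sweeps=100):
    """Eigenpairs of a symmetric matrix S by cyclic Jacobi rotations.

    Returns (eigenvalues, eigenvectors) sorted by decreasing eigenvalue;
    eigenvectors[i] has unit length and a positive first nonzero entry.
    """
    p = len(S)
    A = [[float(x) for x in row] for row in S]   # driven toward diagonal form
    V = identity(p)                               # product of all rotations so far
    for _ in range(max_sweeps):
        off = sum(A[i][j] ** 2 for i in range(p) for j in range(p) if i != j)
        if off < tol:
            break
        for k in range(p - 1):
            for l in range(k + 1, p):
                if A[k][l] == 0.0:
                    continue
                # Angle (|theta| <= pi/4) that zeroes the (k, l) entry of J' A J.
                diff = A[l][l] - A[k][k]
                if diff == 0.0:
                    theta = math.copysign(math.pi / 4, A[k][l])
                else:
                    theta = 0.5 * math.atan(2.0 * A[k][l] / diff)
                c, s = math.cos(theta), math.sin(theta)
                J = identity(p)
                J[k][k] = J[l][l] = c
                J[k][l], J[l][k] = s, -s
                A = matmul(transpose(J), matmul(A, J))
                V = matmul(V, J)  # columns of V converge to the eigenvectors
    pairs = []
    for i in range(p):
        vec = [V[r][i] for r in range(p)]
        lead = next((x for x in vec if abs(x) > 1e-12), 1.0)
        if lead < 0:
            vec = [-x for x in vec]
        pairs.append((A[i][i], vec))
    pairs.sort(key=lambda pair: pair[0], reverse=True)
    return [lam for lam, _ in pairs], [vec for _, vec in pairs]

# Covariance matrix of the worked example (population divisor n = 4).
Sigma = [[1.50, 2.50, 1.00],
         [2.50, 6.00, 3.50],
         [1.00, 3.50, 5.25]]
lams, vecs = jacobi_eigh(Sigma)
for i, (lam, e) in enumerate(zip(lams, vecs), start=1):
    # Var(Y_i) = e_i' Sigma e_i should reproduce lambda_i.
    var_y = sum(e[r] * Sigma[r][c] * e[c] for r in range(3) for c in range(3))
    print(f"lambda_{i} = {lam:.7f}  Var(Y_{i}) = {var_y:.7f}  e_{i} = {[round(x, 7) for x in e]}")
```

Each rotation zeroes one off-diagonal entry and strictly reduces the off-diagonal sum of squares, so the accumulated rotations converge to an orthogonal matrix whose columns are the $e_i$.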
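We can also show that, for a random vector X with covariance matrix $\Sigma$ and eigenvalue-eigenvector pairs $(\lambda_1, e_1), \ldots, (\lambda_p, e_p)$ where $\lambda_1 \ge \lambda_2 \ge \cdots \ge \lambda_p \ge 0$,

$$\sigma_{11} + \cdots + \sigma_{pp} = \sum_{i=1}^{p} \operatorname{Var}(X_i) = \lambda_1 + \cdots + \lambda_p = \sum_{i=1}^{p} \operatorname{Var}(Y_i)$$

so we can assess how well a subset of the principal components $Y_i$ summarizes the original random variables $X_i$. One common method of doing so is the proportion of total population variance due to the kth principal component:

$$\frac{\lambda_k}{\sum_{i=1}^{p} \lambda_i}, \quad k = 1, \ldots, p$$

If a large proportion of the total population variance can be attributed to relatively few principal components, we can replace the original p variables with these principal components without losing much information!

We can also easily find the correlations between the original random variables $X_k$ and the principal components $Y_i$:

$$\rho_{Y_i, X_k} = \frac{e_{ik}\sqrt{\lambda_i}}{\sqrt{\sigma_{kk}}}, \quad i, k = 1, \ldots, p$$

These values are often used in interpreting the principal components $Y_i$.

Example: Suppose we have the following population of four observations made on three random variables X1, X2, and X3:

| Observation | X1 | X2 | X3 |
|---|---|---|---|
| 1 | 1.0 | 6.0 | 9.0 |
| 2 | 4.0 | 12.0 | 10.0 |
| 3 | 3.0 | 12.0 | 15.0 |
| 4 | 4.0 | 10.0 | 12.0 |

Find the three population principal components Y1, Y2, and Y3.

First we need the covariance matrix $\Sigma$ (computed with divisor n = 4, since the four observations form the whole population):

$$\Sigma = \begin{pmatrix} 1.50 & 2.50 & 1.00 \\ 2.50 & 6.00 & 3.50 \\ 1.00 & 3.50 & 5.25 \end{pmatrix}$$

and the corresponding eigenvalue-eigenvector pairs:

$$\lambda_1 = 9.9145474, \quad e_1 = \begin{pmatrix} 0.2910381 \\ 0.7342493 \\ 0.6133309 \end{pmatrix}$$

$$\lambda_2 = 2.5344988, \quad e_2 = \begin{pmatrix} 0.4150386 \\ 0.4807165 \\ -0.7724340 \end{pmatrix}$$

$$\lambda_3 = 0.3009542, \quad e_3 = \begin{pmatrix} 0.8619976 \\ -0.4793640 \\ 0.1648350 \end{pmatrix}$$

so the principal components are:

$$\begin{aligned}
Y_1 &= e_1'X = 0.2910381X_1 + 0.7342493X_2 + 0.6133309X_3 \\
Y_2 &= e_2'X = 0.4150386X_1 + 0.4807165X_2 - 0.7724340X_3 \\
Y_3 &= e_3'X = 0.8619976X_1 - 0.4793640X_2 + 0.1648350X_3
\end{aligned}$$

The total population variance is $\sum_{i=1}^{3} \lambda_i = \sigma_{11} + \sigma_{22} + \sigma_{33} = 1.50 + 6.00 + 5.25 = 12.75$, so the proportion of total population variance due to each principal component is

$$\frac{\lambda_1}{\sum_{i=1}^{3} \lambda_i} = \frac{9.9145474}{12.75} = 0.777611529$$

$$\frac{\lambda_2}{\sum_{i=1}^{3} \lambda_i} = \frac{2.5344988}{12.75} = 0.198784220$$

$$\frac{\lambda_3}{\sum_{i=1}^{3} \lambda_i} = \frac{0.3009542}{12.75} = 0.023604251$$

Note that the third principal component accounts for only about 2.4% of the total variance, so it is relatively unimportant!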
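The bookkeeping in this example (total variance, the proportion of variance per component, and the correlations $\rho_{Y_i,X_k} = e_{ik}\sqrt{\lambda_i}/\sqrt{\sigma_{kk}}$) can be reproduced from the reported eigenpairs. The sketch below is a minimal pure-Python illustration; the eigenvalues, eigenvectors and variances are copied from the example, and the function names are illustrative rather than taken from the slides.

```python
import math
from itertools import accumulate

# Values copied from the worked example (population covariance, divisor n = 4).
sigma_kk = [1.50, 6.00, 5.25]                 # diagonal of Sigma
lams = [9.9145474, 2.5344988, 0.3009542]      # lambda_1 >= lambda_2 >= lambda_3
E = [[0.2910381, 0.7342493, 0.6133309],       # E[i] holds e_{i+1}
     [0.4150386, 0.4807165, -0.7724340],
     [0.8619976, -0.4793640, 0.1648350]]

def variance_shares(lams):
    """Total variance, plus proportion and cumulative proportion for each PC."""
    total = sum(lams)
    props = [lam / total for lam in lams]
    return total, props, list(accumulate(props))

def pc_correlations(lams, E, sigma_kk):
    """rho[k][i] = corr(X_{k+1}, Y_{i+1}) = e_ik * sqrt(lambda_i) / sqrt(sigma_kk)."""
    p = len(lams)
    return [[E[i][k] * math.sqrt(lams[i]) / math.sqrt(sigma_kk[k]) for i in range(p)]
            for k in range(p)]

total, props, cum = variance_shares(lams)
print(f"total variance {total:.4f} vs trace {sum(sigma_kk):.4f}")
for i, (q, c) in enumerate(zip(props, cum), start=1):
    print(f"Y{i}: proportion {q:.4f}, cumulative {c:.4f}")

rho = pc_correlations(lams, E, sigma_kk)
for k, row in enumerate(rho, start=1):
    # Each row's squared correlations sum to 1 (up to rounding of the inputs).
    print(f"X{k}:", " ".join(f"{r:8.4f}" for r in row),
          f" sum of squares {sum(r * r for r in row):.4f}")
```

The sum-of-squares column is a useful sanity check: because $\sum_i e_{ik}^2 \lambda_i = \sigma_{kk}$, every original variable's variance is fully distributed across the p components.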
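Next we obtain the correlations between the original random variables $X_k$ and the principal components $Y_i$:

$$\rho_{Y_1,X_1} = \frac{e_{11}\sqrt{\lambda_1}}{\sqrt{\sigma_{11}}} = \frac{0.2910381\sqrt{9.9145474}}{\sqrt{1.50}} = 0.7482$$

$$\rho_{Y_1,X_2} = \frac{e_{12}\sqrt{\lambda_1}}{\sqrt{\sigma_{22}}} = \frac{0.7342493\sqrt{9.9145474}}{\sqrt{6.00}} = 0.9439$$

$$\rho_{Y_1,X_3} = \frac{e_{13}\sqrt{\lambda_1}}{\sqrt{\sigma_{33}}} = \frac{0.6133309\sqrt{9.9145474}}{\sqrt{5.25}} = 0.8429$$

$$\rho_{Y_2,X_1} = \frac{e_{21}\sqrt{\lambda_2}}{\sqrt{\sigma_{11}}} = \frac{0.4150386\sqrt{2.5344988}}{\sqrt{1.50}} = 0.5395$$

$$\rho_{Y_2,X_2} = \frac{e_{22}\sqrt{\lambda_2}}{\sqrt{\sigma_{22}}} = \frac{0.4807165\sqrt{2.5344988}}{\sqrt{6.00}} = 0.3124$$

$$\rho_{Y_2,X_3} = \frac{e_{23}\sqrt{\lambda_2}}{\sqrt{\sigma_{33}}} = \frac{-0.7724340\sqrt{2.5344988}}{\sqrt{5.25}} = -0.5367$$

$$\rho_{Y_3,X_1} = \frac{e_{31}\sqrt{\lambda_3}}{\sqrt{\sigma_{11}}} = \frac{0.8619976\sqrt{0.3009542}}{\sqrt{1.50}} = 0.3861$$

$$\rho_{Y_3,X_2} = \frac{e_{32}\sqrt{\lambda_3}}{\sqrt{\sigma_{22}}} = \frac{-0.4793640\sqrt{0.3009542}}{\sqrt{6.00}} = -0.1074$$

$$\rho_{Y_3,X_3} = \frac{e_{33}\sqrt{\lambda_3}}{\sqrt{\sigma_{33}}} = \frac{0.1648350\sqrt{0.3009542}}{\sqrt{5.25}} = 0.0395$$

We can display these results in a correlation matrix:

| | Y1 | Y2 | Y3 |
|---|---|---|---|
| X1 | 0.7482 | 0.5395 | 0.3861 |
| X2 | 0.9439 | 0.3124 | -0.1074 |
| X3 | 0.8429 | -0.5367 | 0.0395 |

As a check, the squared correlations in each row sum to 1, because $\sum_i e_{ik}^2 \lambda_i = \sigma_{kk}$ (for example, $0.7482^2 + 0.5395^2 + 0.3861^2 \approx 1$).

[Figure: First vs second PCs, correlations of X1, X2, X3 with Y1 (horizontal axis) and Y2 (vertical axis)]

The first principal component (Y1) is a mixture of all three random variables (X1, X2, and X3). The second principal component (Y2) is a trade-off between X1 and X3.

[Figure: First vs third PCs, correlations of X1, X2, X3 with Y1 (horizontal axis) and Y3 (vertical axis)]

The third principal component (Y3) is mainly a residual of X1, its strongest correlation.

Example: ratings of twelve car brands on eight attributes

| Brand | Fuel Cons. | Service | Value | Price | Design | Sporty | Safety | Easy Handling |
|---|---|---|---|---|---|---|---|---|
| Audi | 4 | 3 | 2 | 4 | 3 | 3 | 2 | 3 |
| BMW | 5 | 2 | 2 | 5 | 2 | 3 | 2 | 3 |
| Citroen | 3 | 4 | 4 | 3 | 4 | 4 | 4 | 3 |
| Ferrari | 5 | 3 | 2 | 6 | 2 | 1 | 3 | 4 |
| Fiat | 2 | 4 | 4 | 3 | 5 | 4 | 4 | 2 |
| Jaguar | 5 | 2 | 2 | 6 | 1 | 2 | 3 | 4 |
| Lada | 3 | 4 | 4 | 2 | 4 | 5 | 5 | 3 |
| Mercedes | 4 | 2 | 2 | 5 | 2 | 3 | 1 | 2 |
| Opel | 2 | 2 | 3 | 3 | 3 | 4 | 3 | 2 |
| Rover | 4 | 3 | 3 | 4 | 3 | 3 | 3 | 3 |
| Trabant | 4 | 5 | 6 | 2 | 4 | 6 | 6 | 3 |
| VW | 2 | 2 | 2 | 3 | 3 | 3 | 3 | 2 |
| Best | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

[Figure: car brands (including Best) plotted on PC 1 vs PC 2]

[Figure: the eight attributes plotted on PC 1 vs PC 2]

[Figure: brands and attributes plotted together on PC 1 vs PC 2]

• The first principal component explains 76% of the variability of the data set.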
• The first two principal components explain more than 90% of the variability of the data set.
• The third PC explains only 5% of the variability.

How many PCs?

• Data from Bryce and Barker on a preliminary study of a possible link between football helmet design and neck injuries. Six head measurements were made on each subject.

[Figure: plot of the six head measurements (EyeHD, EarHD, Wdim, Jaw, FBEye, Circum)]

Method 1
• Retain enough components to account for a chosen percentage of the total variance.
• The challenge lies in selecting an appropriate threshold percentage.
• If we aim too high, we run the risk of including components that are either sample specific or variable specific.
  - Sample specific: a component may not generalize to the population or to other samples.
  - Variable specific: a component is dominated by a single variable and does not represent a composite summary of several variables.

Method 2
• Widely used, and the default in many software packages.
• Retains those components that account for more variance than the average variance of the original variables, i.e., components whose eigenvalue exceeds the average eigenvalue (for a correlation matrix this average is 1).

Method 3
• Plot the eigenvalues against the number of components (a scree plot).
• Extract components up to the point where the plot "levels off".
• The right-hand tail of the curve is the "scree" (like the lower part of a rocky slope).

[Figure: scree plot of eigenvalue vs. component number (1 to 6)]

Method 4
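Methods 1 and 2 above are simple rules on the eigenvalues, while Method 3 is a visual judgement on the scree plot. The pure-Python sketch below implements the first two and adds a helper listing the drop between consecutive eigenvalues as an aid to reading a scree plot. It is applied to the eigenvalues of the three-variable worked example; the 0.90 threshold and all function names are illustrative assumptions, not values or names from the slides.

```python
from itertools import accumulate

def n_for_threshold(eigenvalues, threshold):
    """Method 1: smallest m whose first m PCs reach `threshold` (e.g. 0.90) of total variance."""
    lams = sorted(eigenvalues, reverse=True)
    total = sum(lams)
    for m, running in enumerate(accumulate(lams), start=1):
        if running / total >= threshold:
            return m
    return len(lams)  # guards against floating-point shortfall when threshold = 1

def n_above_average(eigenvalues):
    """Method 2: number of PCs whose eigenvalue exceeds the average eigenvalue."""
    average = sum(eigenvalues) / len(eigenvalues)
    return sum(1 for lam in eigenvalues if lam > average)

def scree_drops(eigenvalues):
    """Method 3 aid: drop between consecutive sorted eigenvalues; the scree begins where drops become small."""
    lams = sorted(eigenvalues, reverse=True)
    return [hi - lo for hi, lo in zip(lams, lams[1:])]

# Eigenvalues from the three-variable worked example.
lams = [9.9145474, 2.5344988, 0.3009542]
print(n_for_threshold(lams, 0.90))
print(n_above_average(lams))
print(scree_drops(lams))
```

With these eigenvalues, Method 1 at a 90% threshold keeps two components, while Method 2 (average eigenvalue 12.75 / 3 = 4.25) keeps only one, so the rules can disagree and the choice of rule matters.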